Stable Visual ML Systems for Real-World Environments

We design and adapt visual machine learning systems that remain reliable under outdoor, low-visibility, and harsh operating conditions.

Why Visual ML Fails After Deployment

Outdoor and harsh-environment systems operate under seasonal shifts, low contrast, motion blur, and ambiguous visual boundaries.

A model that works in controlled tests often becomes unstable once deployed. In real systems, perception feeds downstream decisions, so instability in perception propagates through the rest of the system.

Reliability must be engineered during preparation, not fixed after deployment.

Environment-Aligned Preparation

Align training data with real operating conditions and evaluate perception behavior under motion, transitions, and seasonal change.

Stability Engineering

Reduce flicker, improve temporal consistency, and structure the transition from prototype performance to field reliability.

Deployment Alignment

Structure runtime evaluation, compare perception strategies, and validate stability before field deployment — including on edge devices.
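To make "flicker" concrete, one common way to quantify it is the rate at which a prediction changes between consecutive frames while the scene is essentially static. The sketch below is a minimal, hypothetical illustration and is not part of AGVScanner or a description of our delivery process. `flip_rate` counts label changes across a frame sequence. `ema_smooth` applies an exponential moving average to per-frame class scores, which is one simple temporal-consistency technique among many. The function names, the `alpha` parameter, and the toy scores are assumptions chosen for illustration.

```python
from typing import List, Optional, Sequence


def flip_rate(labels: Sequence[int]) -> float:
    """Fraction of consecutive frame pairs whose predicted label differs."""
    if len(labels) < 2:
        return 0.0
    flips = sum(1 for prev, cur in zip(labels, labels[1:]) if prev != cur)
    return flips / (len(labels) - 1)


def argmax(scores: Sequence[float]) -> int:
    """Index of the highest score (the first one wins on ties)."""
    return max(range(len(scores)), key=lambda i: scores[i])


def ema_smooth(frames: Sequence[Sequence[float]], alpha: float = 0.3) -> List[List[float]]:
    """Exponential moving average over per-frame class scores.

    alpha is the weight given to the newest frame: smaller values smooth
    more strongly but react more slowly to genuine changes in the scene.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: List[List[float]] = []
    state: Optional[List[float]] = None
    for scores in frames:
        if state is None:
            # First frame: start the average from the raw scores.
            state = list(scores)
        else:
            # Blend the new scores with the running average.
            state = [alpha * s + (1.0 - alpha) * p for s, p in zip(scores, state)]
        smoothed.append(state)
    return smoothed


if __name__ == "__main__":
    # Hypothetical two-class scores for a static object whose raw prediction wavers.
    raw = [[0.6, 0.4], [0.4, 0.6], [0.6, 0.4], [0.45, 0.55], [0.6, 0.4]]
    raw_labels = [argmax(s) for s in raw]
    smooth_labels = [argmax(s) for s in ema_smooth(raw, alpha=0.3)]
    print(flip_rate(raw_labels), flip_rate(smooth_labels))  # prints: 1.0 0.0
```

The trade-off is latency: with `alpha=0.3`, a genuine class change takes several frames to appear in the smoothed output. Flip rate is also only a meaningful flicker measure on sequences where the true label is stable. On moving scenes it mixes real changes with instability.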

Visual ML for Harsh and Restricted Environments

We specialize in visual machine learning for environments where perception is difficult by default and public datasets or benchmarks are insufficient.

This includes outdoor, night-time, and low-visibility operation, as well as infrared and multi-spectral vision, where motion, environmental variability, and sensor limitations dominate system behavior.

Much of this work supports non-public or access-restricted deployments, where public data offers little guidance and fixes after deployment are difficult. Reliability and stability are therefore treated as primary engineering constraints from the start.

AGVScanner — Free Field Evaluation & Runtime Framework

AGVScanner is a free perception runtime and validation framework designed for real operating conditions. It allows teams to evaluate visual ML behavior directly in the field — using live cameras, recorded streams, or robotic middleware — rather than relying solely on offline benchmarks.

It supports user-defined models as well as selected perception models provided with the framework. Some included models may be used only within AGVScanner, reflecting their role in evaluation and validation rather than standalone deployment.

AGVScanner can be integrated into end-user systems and supports input from ROS, camera streams, and video sources. In addition to visual outputs, it can publish results via ROS messages, MAVLink, and MQTT.

It can be used as a validation tool and as an on-device runtime component during field testing, enabling early detection of instability and misalignment before full deployment.

Who We Work With

Teams building products that rely on visual ML in real operating environments, including:

- Outdoor robotics and autonomous platforms

- Infrastructure, inspection, and monitoring systems

- Industrial and construction environments

- Harsh, low-visibility, or access-restricted operating contexts

Field Insights

Deployment Risk Starts in ML Preparation (2/18/2026) - Most outdoor robotics programs do not fail at inference time. They fail during ML preparation. Benchmark accuracy may look acceptable, but operational…

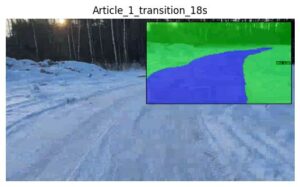

Real-time segmentation in outdoor environments (2/16/2026) - What this demo demonstrates: live segmentation and classification running on NVIDIA Holoscan; deterministic low-latency processing on edge hardware; stable operation with real…

AI-based granite vs dolomite sorting (2/16/2026) - Business background: In the Baltics, post–ice age geology has left large volumes of mixed, oval-shaped rocks. Two common materials are dolomite and granite, often similar…

If your system works in demo conditions but struggles in real environments, deployment risk has already begun.

It’s time to structure visual ML properly.